How can something that looks perfectly innocent to humans completely confuse computer systems?

Welcome to the world of adversarial attacks, where a strategically placed advertisement can make your car accelerate uncontrollably.

Fooling face recognition systems

In 2018, police posted the photo and name of Dong Mingzhu, “one of the toughest businesswomen in China”, on a “name and shame” screen at a train station.

The local police in Ningbo, south of Shanghai, had used face recognition software to identify her as a jaywalker.

Only she wasn’t there.

Anyone who paid attention to the “name and shame” screen could see what had happened: the camera had captured her photo in an advertisement on a passing bus. The real-time system, built on face detection and recognition, couldn’t tell the difference between a real person and a photo in an advertisement.

The mistake might seem harmless. But such errors can have serious consequences for individuals, and these systems are operating at a growing scale: some intersection cameras in China name and shame thousands of jaywalkers a month.

The error in Dong Mingzhu’s case was spotted quickly because the system was open: it displayed its outcomes immediately, for everyone to see. But what if the system had not put the photo on a public display? What if, instead, it had triggered an automated update to her Social Credit score and limited her ability to travel?

Fooling speed cameras

Did you know you could avoid a speeding ticket by adding a sticker to your number plate?

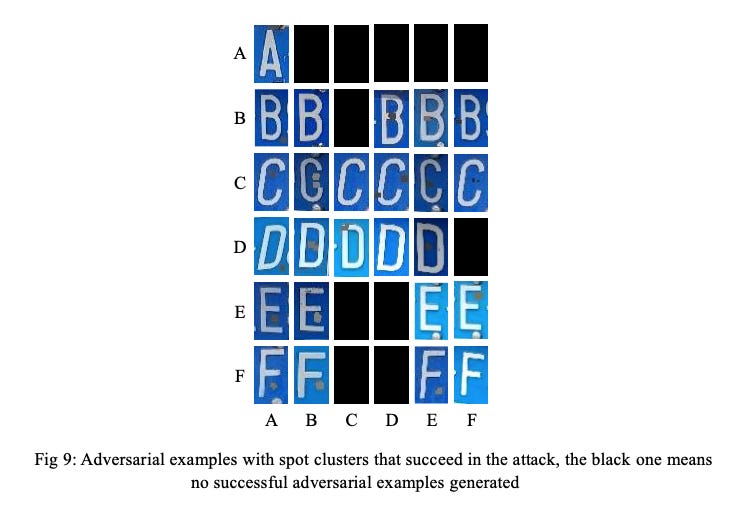

Last year, a paper on adversarial attacks against licence plate recognition systems was published on arXiv. The authors claim they can “fool” a certain licence plate recognition system, with a success rate of 93%, simply by placing a dark sticker (or a few stickers) on the number plate.

Of course, tampering with licence plates is likely illegal where you live. But consider the photo from the research paper, which shows how seemingly random spots around the letters can make the recognition system mistake, for example, the letter F for the letter A (bottom-left corner of the photo). Does that plate look as though it has been deliberately tampered with?

It’s a completely new level of sophistication compared with earlier “code injection” efforts (yes, that one is just a humorous prank).
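Why would a handful of spots fool a recognition system at all? The sketch below is a deliberately tiny, hypothetical illustration, not the method used in the paper: a made-up linear “classifier” that labels four pixel intensities as either “A” or “F”, attacked with a single step in the spirit of the fast gradient sign method (FGSM). The weights, pixel values and function names are all invented for this example.

```python
# Hypothetical toy model: the weights and bias are made up for illustration.
WEIGHTS = [1.0, -2.0, 0.5, 3.0]
BIAS = -1.0


def sign(v: float) -> float:
    """Return 1.0, -1.0 or 0.0 depending on the sign of v."""
    return float((v > 0) - (v < 0))


def score(pixels: list[float]) -> float:
    """Linear score: a positive value means "A", otherwise "F"."""
    return sum(w * p for w, p in zip(WEIGHTS, pixels)) + BIAS


def classify(pixels: list[float]) -> str:
    return "A" if score(pixels) > 0 else "F"


def perturb_towards_a(pixels: list[float], eps: float) -> list[float]:
    # For a linear model, the gradient of the score with respect to the
    # input is just WEIGHTS. Nudging every pixel by eps in the direction of
    # sign(weight) raises the score by eps * sum(|w|) (before clipping);
    # this is the FGSM idea. Pixels are clipped to the valid range [0, 1].
    return [min(1.0, max(0.0, p + eps * sign(w))) for w, p in zip(WEIGHTS, pixels)]


original = [0.2, 0.4, 0.6, 0.3]
adversarial = perturb_towards_a(original, eps=0.1)
print(classify(original), classify(adversarial))  # prints: F A
```

Each pixel changes by at most 0.1, yet the label flips, because the small changes all push the score in the same direction. Real recognition systems are deep neural networks rather than four-weight linear models, and physical stickers also have to survive changes in lighting, angle and distance, but the underlying intuition is broadly similar.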

Fooling cars

There is an entire field of research exploring ways to make autonomous cars perform unintended actions. One interesting work in this space is called DARTS: Deceiving Autonomous Cars with Toxic Signs.

The authors show a number of ways to deceive autonomous cars. Most of their signs look perfectly harmless to an unsuspecting human eye, yet many of them can cause a self-driving car to behave in unexpected ways.

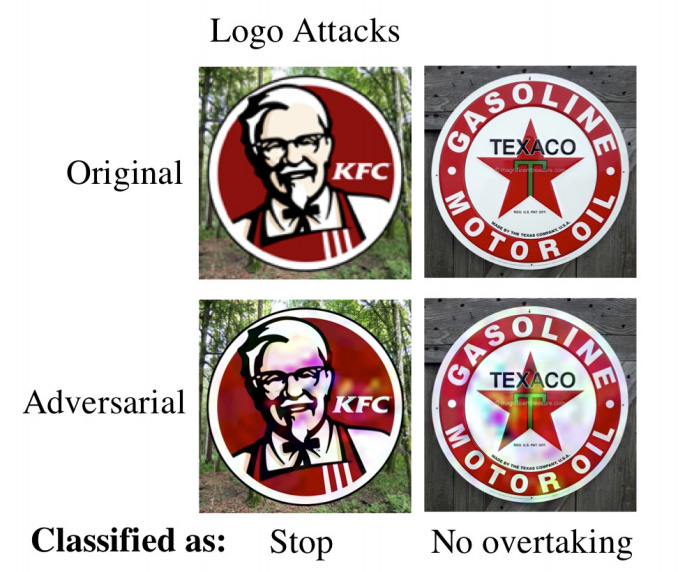

What if a fast-food chain’s sign could make your car stop? Or what if a gas station sign could limit your car’s ability to overtake? See the two impressive examples of adversarial attacks in the image above.

Adversarial anti-virus software?

I can’t wait to see “adversarial anti-virus” approaches. Perhaps we’ll soon see patrols with cameras scanning for known adversarial attacks? Or perhaps our self-driving cars will receive daily software updates that address newly discovered adversarial attacks?

Or, just perhaps, adversarial attacks will remain a theoretical problem, with no one actually using them to fool our cars or speed cameras?

As long as we build systems that can tell a real person from a photo in an advertisement, we might be perfectly fine.

How could adversarial attacks affect your life, or your business? Can you think of a scenario in which they would be beneficial rather than disruptive? Let me know!