“It is impossible for someone to lie unless he thinks he knows the truth. Producing bullshit requires no such conviction.”

Harry G. Frankfurt, On Bullshit

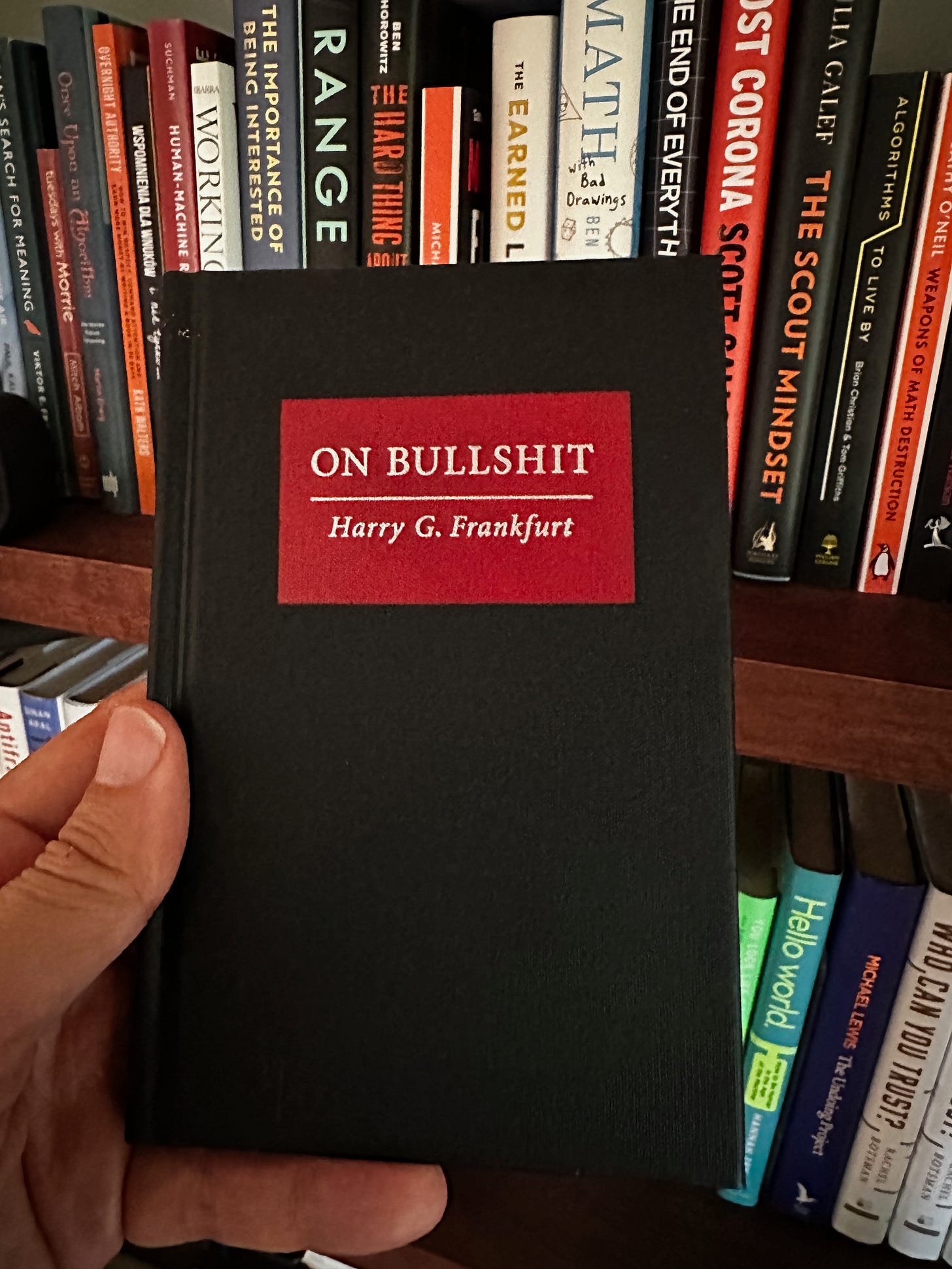

There’s a tiny book in my library. Physically, it’s roughly the size of a smartphone: just as thin and a tiny bit wider. But judged by impact, it could be among the most prominent books on my shelves. It first appeared as an essay in a journal in 1986 and was published as a book in 2005. It was a New York Times bestseller for 27 weeks after its release.

It’s called “On Bullshit.” Harry G. Frankfurt wrote it. It explores what bullshit is and why we produce it.

In the book, Frankfurt defines bullshit as information that is meant to persuade without regard for truth. Liars attempt to hide the truth. Bullshitters don’t care whether they spread truth or lies. To lie, you need to know the truth. Bullshitting doesn’t require such knowledge. Indeed, bullshitters will occasionally produce truth.

Are large language models producing bullshit? These sophisticated statistical machines generate probabilistic content that occasionally (and, in recent LLMs, more often than not) turns out to be true.

What is botshit?

If you use Frankfurt’s definition, there’s a problem applying it to Generative AI. On one hand, you could argue that these algorithms have no regard for truth: check. On the other hand, these large language models do not mean to persuade; their intrinsic motivation is to generate the most probable response. No check here. At least not yet.

Because bullshitting is not just the creation of content without regard for the truth but also using it to persuade others, we’re yet to see genuinely bullshitting AI. In the meantime, we’re starting to drown in botshit: a hybrid of (1) generative AI content created without regard for the truth and (2) its use by humans in persuading others.

Botshit is information created by Generative AI with no regard for truth and then used by humans (with no regard for truth either) to persuade others.

And, of course, academics are on it! A recently released paper, “Beware of Botshit: How to Manage the Epistemic Risks of Generative Chatbots”, written by Tim Hannigan, Ian P. McCarthy, and Andre Spicer, provides a typology of bullshit (pseudo-profound, persuasive, evasive, and social) as well as categories of botshit (intrinsic and extrinsic). Let’s get straight to botshit.

Intrinsic botshitting happens when humans use outputs that might be grounded in a bot’s (large language model’s) knowledge, but that knowledge is untrue at the time of content generation or use. This is typically the case when a bot’s training dataset is outdated. For instance, ChatGPT used to provide outdated information about a country’s leadership, especially if elections had happened recently. Only recently did it start to validate its content by looking up the most recent data.

Extrinsic botshitting happens when humans use outputs not grounded in a bot’s knowledge. Large language models are unlikely to answer “I don’t know” to a prompt. Ask one to write a few paragraphs about the economy of Paraguay in 2026, and it will happily hallucinate an answer without even mentioning that it’s speculation.

Not all botshitting is bad, mind you! Such speculations are excellent during brainstorming and scenario planning sessions, where regard for truth is secondary. But they’re highly problematic in other scenarios. What if the insight into Paraguay’s economy was used to guide investment decisions?

The paper on botshit explores this in a matrix of truthfulness importance versus truthfulness verifiability.

How to avoid botshit?

First of all, not all botshit has to be avoided. Some botshitting is welcome in an organisation, especially in creative and exploratory tasks. In other tasks, though, it should be avoided. Here are some steps to consider for avoiding botshit in your business.

Train and build awareness: Educate your teams about the nature of generative AI. Help them understand its capabilities and limitations, particularly in discerning between verified facts and speculative content.

Critically verify: Make sure your teams verify the information generated by AI. Ensure it aligns with current, factual data, especially in decision-making scenarios where accuracy is paramount.

Set clear use cases: Define and communicate where and how AI-generated content should be used within your organisation. Establish boundaries to prevent misuse or overreliance on AI for critical tasks.

Encourage human oversight: No matter how advanced AI gets, human oversight remains crucial. Ensure there’s a system in place for human review, especially for content used in external communications or strategic decisions.

Use generative AI responsibly: Remember that while AI can be a powerful tool, it’s not infallible. Use it as an aid, not a substitute for human creativity, particularly in complex, nuanced situations.

So, the next time you find yourself marvelling at the eloquence of a digital assistant or the depth of an AI-generated report, pause and ask yourself: are you witnessing a breakthrough in artificial intelligence, or are you knee-deep in botshit?