Where's the "hallucinate more" button?

Ever notice how the tech world obsesses over AI hallucinations? The web is full of warnings about ChatGPT making things up. Lawyers get fired for citing made-up cases. Consultants are falling over themselves to protect businesses from the perils of gen AI. Hey, I wrote a post about botshit, too! Yet, in one specific domain, these hallucinations (or rather, “creative imagination”) might be just what we need.

Welcome to the world of strategic planning, where imagining “impossible” futures is not only allowed but required.

This insight drove my colleagues Graham Kenny and Kim Oosthuizen, and me, to explore how CEOs use generative AI in strategic planning. Our findings, published in Harvard Business Review, became one of September’s most-read articles. What we discovered was fascinating: while most executives are wary of AI’s tendency to “hallucinate” in day-to-day operations, others deliberately leverage this creative capacity to break through their strategic blind spots.

The Reality Check

Two cases stood out (both anonymised, as explained in the note at the end of this post). One was a commercial agricultural research organisation, dominant in its field but stuck in an agricultural scientist’s mindset. The other was a crematorium grappling with environmental challenges and changing consumer preferences. Both were perfect testing grounds for AI’s strategic imagination.

When AI Shines (And When It Doesn’t)

Here’s what we discovered: AI’s greatest strength in strategic planning isn’t its accuracy—it’s its audacity (or what others call “being very confident, even when being very wrong”). When a CEO asked about future burial practices, a generative AI tool suggested everything from space-based memorials to using liquid nitrogen for body preservation. While some ideas were outlandish, others—like vertical cemeteries and memorial forests—were genuinely innovative and practical. Human team members would then identify and further refine the most promising ones.

The key isn't to prevent AI from "hallucinating"—it's to harness its imaginative power while applying human judgment to separate the practical from the purely fantastical.

The Three Rules of Strategic AI

Through our research, we developed three fundamental principles for using generative AI in strategic planning:

Embrace the Unexpected: When generative AI produces seemingly outlandish ideas, don’t dismiss them immediately. They might contain seeds of breakthrough innovations. Or, at least, they can get your human teams to think differently (in case you believe they are too stuck in the weeds). Get it to generate a hundred or a thousand ideas! Even if 99% are wrong, you’ll get one or even ten good ones, almost guaranteed!

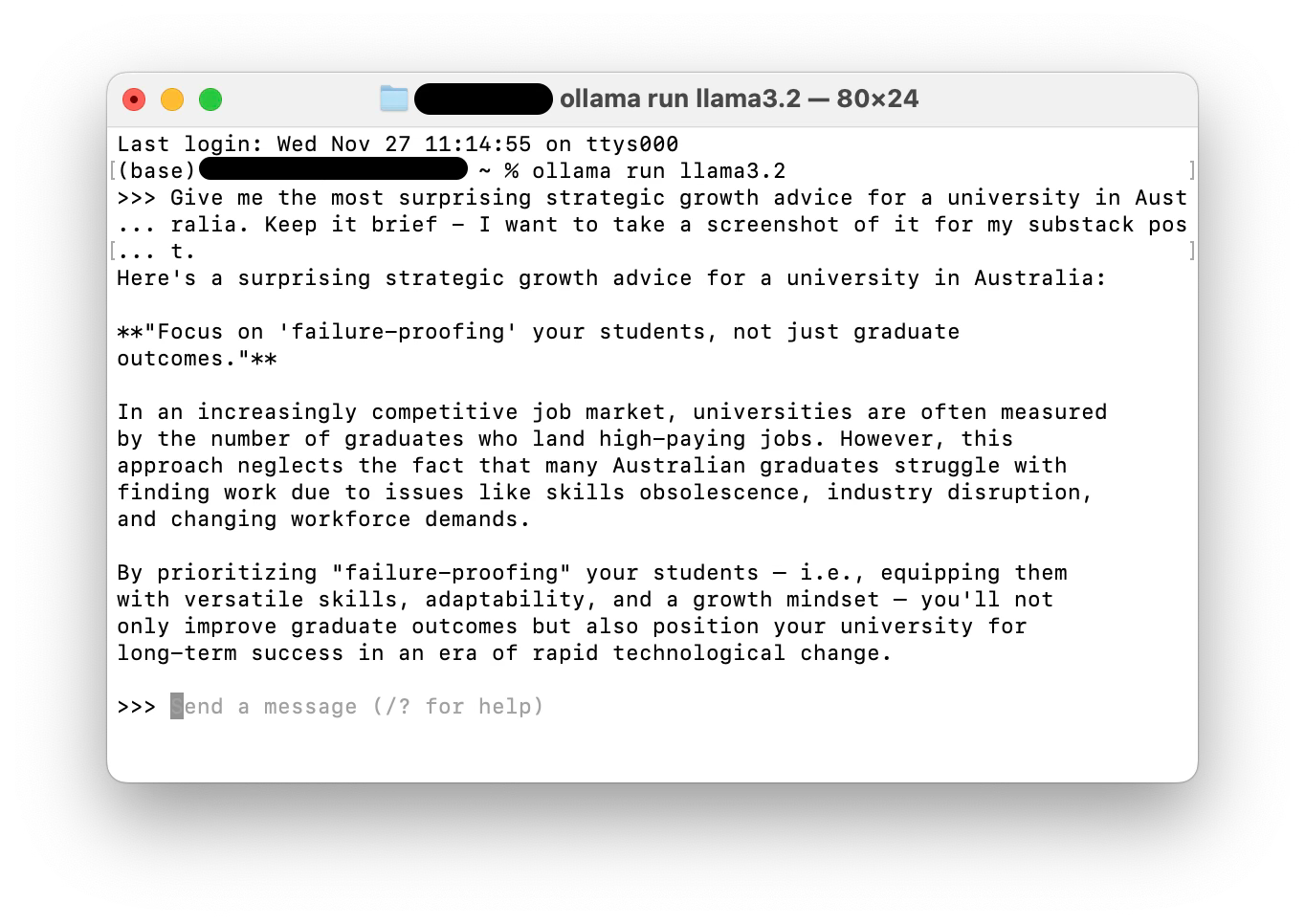

Ask Better Questions: Instead of “What will happen?”, ask “What could happen?” Don’t try to narrow down; try to expand. Let these tools explore the boundaries of possibility rather than demanding precision. Reframing your questions is just as crucial for the algorithm as it is for you: it will calibrate your expectations.

Use Human Filters: Your team’s experience and industry knowledge are crucial for transforming generative AI’s creative output into actionable strategy. While generative AI might be good at divergent thinking, your team should come in to bring it home—by applying convergent thinking.

From Imagination to Implementation

Here’s a practical example from our research. When Keith, the CEO of an agricultural research firm, asked a generative AI tool to identify future strategic challenges, the chatbot completely missed obvious issues like pricing and profitability (causing a few raised eyebrows in the room). But then it suggested four areas the executive team had overlooked entirely: technological advancements, regulatory changes, client expectations, and funding models. Without the algorithmic help, these challenges would not have made it into the discussion!

The Sweet Spot: Using AI’s “Hallucinations” Strategically

The trick is knowing when to embrace AI’s creative leaps. Here’s what (we think) works:

Divergent Thinking Sessions: Use AI when you need to break free from industry orthodoxy. Because sometimes it takes an algorithm that doesn't know the rules to show you which ones need breaking.

Trend Exploration: Let AI imagine different futures, even unlikely ones. After all, who in 2019 would have predicted we'd all be working from home in 2020?

Blind Spot Detection: Use AI to challenge your team’s assumptions. It's like having that one friend who always asks the uncomfortable questions, but without the awkward lunch conversations.

And here’s where you need to keep AI in check:

Financial Projections: Stick to human expertise for numbers. A large language model (the hint is in the name!) might dream up flying cars, but it shouldn't be calculating your ROI on them.

Company-Specific Decisions: AI doesn’t know your company culture. It's never had a coffee with your team or survived one of those legendary office parties.

Final Decision Making: Use AI to inform, not to decide. Because at the end of the day, it's you who'll have to look your board in the eye.

Remember: these systems do not predict the future—they imagine (yes, hallucinate) possible futures. Your job is to figure out which ones are worth pursuing.

Turning AI Hallucinations into Strategic Gold

The irony isn’t lost on me. While most of the world is working hard to prevent AI hallucinations, we are asking strategic planners to learn to embrace them. It’s a bit like that classic advice about creativity: sometimes, you need to let your mind wander to find the best path forward.

The next time someone warns you about AI hallucinations, remember: in strategic planning, the most valuable insights often come from imagining what others think impossible (or cannot imagine at all).

After all, every great strategy started as someone’s “hallucination” of a future that didn’t exist yet.

Read the HBR article here.

Note on the cases: We anonymised them by changing the industries and functions involved but retained the context, challenges, and solutions.